AutoArena

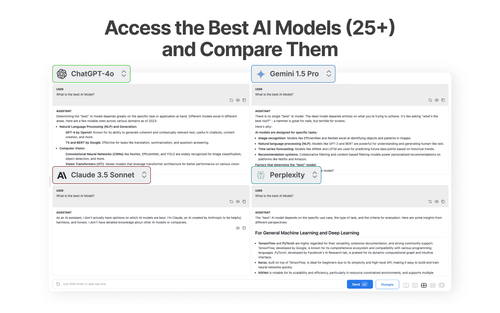

AutoArena is an open-source tool that automates head-to-head evaluations using LLM judges to rank GenAI systems. Quickly and accurately generate leaderboards comparing different LLMs, RAG setups, or prompt variations, and fine-tune custom judges to fit your needs.

Product Description

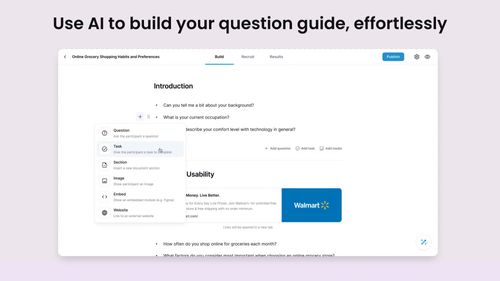

AutoArena is an open-source tool that automates head-to-head evaluations using LLM judges to rank generative AI systems. It produces fast and accurate rankings by computing Elo scores and confidence intervals from the votes of multiple judge models, which reduces evaluation bias. Users can fine-tune judges for domain-specific tasks and set up automations in their code repository so that evaluation runs as part of the development workflow.
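To make the ranking idea concrete, here is a minimal sketch of how Elo scores and bootstrap confidence intervals can be derived from head-to-head judge votes. It is a generic illustration, not AutoArena's actual implementation: the function names, the `(model_a, model_b, winner)` record shape, the K-factor of 16, and the base rating of 1000 are all assumptions chosen for the example.

```python
import random


def elo_ratings(battles, k=16.0, base=1000.0):
    """Compute Elo ratings from head-to-head results.

    battles: list of (model_a, model_b, winner) tuples, where winner is
    "a", "b", or "tie". This record shape is an assumption for the sketch.
    """
    ratings = {}
    for model_a, model_b, winner in battles:
        ra = ratings.setdefault(model_a, base)
        rb = ratings.setdefault(model_b, base)
        # Standard Elo expected score for model_a against model_b.
        expected_a = 1.0 / (1.0 + 10 ** ((rb - ra) / 400.0))
        score_a = {"a": 1.0, "b": 0.0, "tie": 0.5}[winner]
        # Zero-sum update: whatever model_a gains, model_b loses.
        delta = k * (score_a - expected_a)
        ratings[model_a] = ra + delta
        ratings[model_b] = rb - delta
    return ratings


def bootstrap_intervals(battles, rounds=200, alpha=0.05, seed=0):
    """Estimate a (1 - alpha) interval per model by resampling battles."""
    rng = random.Random(seed)
    samples = {}
    for _ in range(rounds):
        # Resample with replacement, then recompute ratings from scratch.
        resampled = [rng.choice(battles) for _ in battles]
        for model, rating in elo_ratings(resampled).items():
            samples.setdefault(model, []).append(rating)
    intervals = {}
    for model, values in samples.items():
        values.sort()
        low = values[int((alpha / 2) * (len(values) - 1))]
        high = values[int((1 - alpha / 2) * (len(values) - 1))]
        intervals[model] = (low, high)
    return intervals


if __name__ == "__main__":
    # Hypothetical votes: model "A" wins 8 of 10 battles against model "B".
    votes = [("A", "B", "a")] * 8 + [("A", "B", "b")] * 2
    print(elo_ratings(votes))
    print(bootstrap_intervals(votes))
```

Votes from several judge models can simply be concatenated into one `battles` list before rating, so that no single judge's preferences dominate the result.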

Core Features

- Automated head-to-head evaluations using LLM judges

- Generating leaderboards for comparing LLMs, RAG setups, or prompt variations

- Fine-tuning custom judges for specific needs

- Parallelization, randomization, and other features to enhance evaluation efficiency
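As an illustration of the parallelization and randomization item above, the sketch below randomizes which response the judge sees first (a common way to counter position bias) and runs judge calls concurrently in a thread pool. The listing does not say how AutoArena implements these features, so everything here is hypothetical: `stub_judge` stands in for a real LLM judge call, and `judge_pair` and `run_battles` are names invented for the example.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def stub_judge(prompt, first, second):
    """Placeholder for an LLM judge call (hypothetical).

    Returns "first", "second", or "tie". This stub simply prefers the
    longer response so the example runs without any API access.
    """
    if len(first) == len(second):
        return "tie"
    return "first" if len(first) > len(second) else "second"


def judge_pair(item, judge, seed):
    """Judge one (prompt, response_a, response_b) item with random ordering."""
    prompt, response_a, response_b = item
    # Randomly swap presentation order so neither system always goes first.
    swapped = random.Random(seed).random() < 0.5
    first, second = (response_b, response_a) if swapped else (response_a, response_b)
    verdict = judge(prompt, first, second)
    if verdict == "tie":
        return "tie"
    first_won = verdict == "first"
    # Map the positional verdict back to the original "a"/"b" labels.
    if swapped:
        return "b" if first_won else "a"
    return "a" if first_won else "b"


def run_battles(items, judge=stub_judge, workers=4, seed=0):
    """Run all judgments concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(
            pool.map(
                lambda pair: judge_pair(pair[1], judge, seed + pair[0]),
                enumerate(items),
            )
        )


if __name__ == "__main__":
    items = [
        ("q1", "a long, detailed answer", "short"),
        ("q2", "short", "a long, detailed answer"),
    ]
    print(run_battles(items))
```

Threads suit this workload because judge calls to a hosted model are typically network-bound; a per-item seed keeps the ordering reproducible across runs.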

Use Cases

- Evaluate generative AI systems in CI environments

- Set up automations to prevent bad prompt changes and updates

- Collaborate on evaluations in cloud or on-premise settings

Similar Products

News, events, press releases and research articles about Web3, Metaverse, Blockchain, Artificial Intelligence, Crypto, Decentralized Finance, NFTs and Gaming. Web3Wire has been recognized as one of the Top 15 Web3 Blogs by Feedspot, with 50K+ monthly visitors and growing. We partner with Globe Newswire and PRNewswire, providing distribution for Web3 and crypto press releases. Our coverage includes major events like the Future Blockchain Summit 2024, India Blockchain Summit, and Blockchain Life.

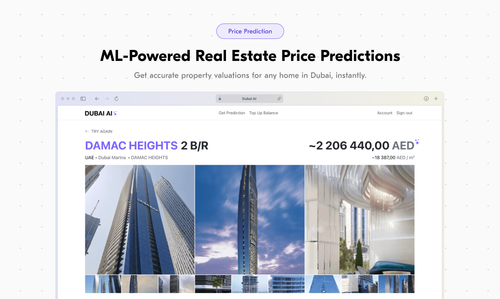

Dubai AI revolutionizes the real estate market in Dubai by harnessing the power of machine learning. Trained on millions of transactions, our AI predicts the market value of any property in Dubai based on key parameters such as location, size, amenities, and more. Dubai AI doesn’t just give a price — it explains the factors behind that price, making it easier for buyers to make informed decisions, sellers to set competitive prices, and real estate agents to communicate value to their clients.